flowchart LR

p[[Plan]]

sim[[Simulate]]

a[[Acquire]]

e[[Explore / Analyze]]

s[[Share]]

p --> sim --> a --> e --> s

Introduction + Telling Stories with Data

SOCI 3040 – Quantitative Research Methods

Department of Sociology, Memorial University

January 7, 2025

👋 Welcome to SOCI 3040!

My name is [John, Professor McLevey, Dr. McLevey] (he/him).

Professor & Head of Sociology

New to Memorial after 11 years at University of Waterloo

Agenda

- Who are you? Background? Expectations?

- What is this course about?

- What will we do? Where is everything?

Who are you?

Do you have any previous quant courses / experience?

What are your expectations for this course?

What is this course about?

3040 Calendar Description

SOCI 3040, Quantitative Research Methods, will familiarize students with the procedures for understanding and conducting quantitative social science research. It will introduce students to the quantitative research process, hypothesis development and testing, and the application of appropriate tools for analyzing quantitative data. All sections of this course count towards the HSS Quantitative Reasoning Requirement (see mun.ca/hss/qr). (PR: SOCI 1000 or the former SOCI 2000)

This Section (001)

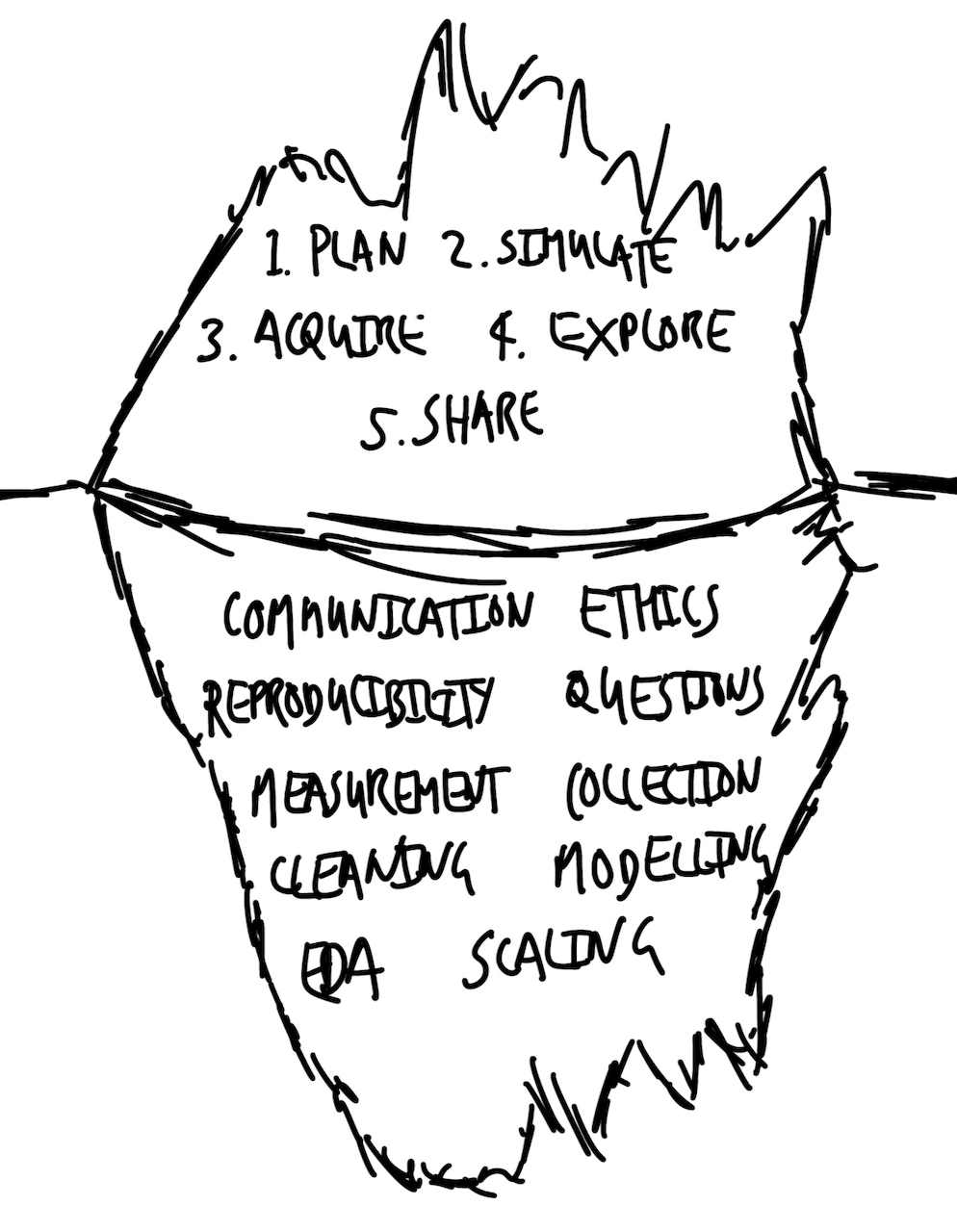

This section of SOCI 3040 is an introduction to quantitative research methods, from planning an analysis to sharing the final results. Following the workflow from Rohan Alexander’s (2023) Telling Stories with Data, you will learn how to:

- plan an analysis and sketch your data and endpoint

- simulate some data to “force you into the details”

- acquire, assess, and prepare empirical data for analysis

- explore and analyze data by creating visualizations and fitting models

- share the results of your work with the world!

“A lack of clear communication sometimes reflects a failure by the researcher to understand what is going on, or even what they are doing.” (Alexander 2023)

Core foundation of quantitative research methods

Bridge between analysis and understanding

Essential skill for modern researchers

You will use this workflow in the context of learning foundational quantitative research skills, including conducting exploratory data analyses and fitting, assessing, and interpreting linear and generalized linear models. Reproducibility and research ethics are considered throughout the workflow, and the entire course.

Common Concerns & Key Questions

- What is the dataset? Who generated it and why?

- What is the underlying process? What’s missing or has been poorly measured? Could other datasets have been generated, and if so, how different could they have been to the one that we have?

- What is the dataset trying to say? What else could it say?

- What do we want others to see? How do we convince them?

- Who is affected? Are they represented in the data? Have they been involved in the analysis?

Components of the Data Storytelling Workflow

Core Workflow Components

Plan, Simulate, Acquire, Explore / Analyze, Share

Plan and Sketch

deliberate, reasoned decisions

purposeful adjustments

even 10 minutes of planning is valuable

Simulate Data

Forces detailed thinking

Clarifies expected data structure and distributions.

Helps with cleaning and preparation

Identifies potential issues beforehand.

Provides clear testing framework

Ensures data meets expectations.

“Almost free” with modern computing

Provides “an intimate feeling for the situation” (Hamming [1997] 2020)
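
The points above can be made concrete with a small sketch. It simulates a hypothetical survey and writes the expectations down as tests that could later be run on the real data. The variable names (`age`, `hours_online`, `province`), their ranges, and the seed are assumptions made up for illustration; they do not come from the course materials, and the course itself uses R rather than Python.

```python
import random

PROVINCES = ["NL", "NS", "NB", "PE"]  # hypothetical categories


def simulate_survey(n, seed=3040):
    """Simulate n hypothetical survey respondents."""
    rng = random.Random(seed)  # fixed seed so the simulation is repeatable
    rows = []
    for i in range(n):
        rows.append({
            "id": i + 1,
            "age": rng.randint(18, 90),                    # whole years
            "hours_online": round(rng.uniform(0, 12), 1),  # hours per day
            "province": rng.choice(PROVINCES),
        })
    return rows


def check_survey(rows):
    """Expectations written for simulated data can be reused on real data."""
    assert len({r["id"] for r in rows}) == len(rows), "ids must be unique"
    assert all(18 <= r["age"] <= 90 for r in rows), "age out of range"
    assert all(0 <= r["hours_online"] <= 12 for r in rows), "hours out of range"
    assert all(r["province"] in PROVINCES for r in rows), "unknown province"
    return True


simulated = simulate_survey(100)
check_survey(simulated)
```

Writing `check_survey` before any real data arrive is the "clear testing framework" idea: if the acquired data fail a check, either the data or the expectations need a second look.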

Acquire and Prepare

Often overlooked but crucial stage

Many difficult decisions required: data sources, formats, permissions.

Can significantly affect statistical results (Huntington-Klein et al. 2021)

Common challenges: quantity (too little or too much data) and quality

Explore and Understand

Begin with descriptive statistics

Move to statistical models

Remember: Models are tools, not truth, and they reflect our previous decisions, data acquisition choices, and cleaning procedures.
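
As a rough illustration of moving from description to a model, the sketch below summarizes two variables and then fits a simple least-squares line by hand. The data are simulated under an assumed relationship (`y = 2 + 0.5x + noise`) chosen only for this example; nothing here is taken from the course datasets.

```python
import random
import statistics

rng = random.Random(1)

# Hypothetical data generated under an assumed linear relationship.
x = [rng.uniform(0, 10) for _ in range(200)]
y = [2 + 0.5 * xi + rng.gauss(0, 1) for xi in x]

# Step 1: descriptive statistics.
mean_x, mean_y = statistics.mean(x), statistics.mean(y)
summary = {
    "mean_x": mean_x,
    "sd_x": statistics.stdev(x),
    "mean_y": mean_y,
    "sd_y": statistics.stdev(y),
}

# Step 2: a simple linear model fitted by ordinary least squares.
sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
sxx = sum((xi - mean_x) ** 2 for xi in x)
slope = sxy / sxx
intercept = mean_y - slope * mean_x

print(summary)
print(f"fitted line: y = {intercept:.2f} + {slope:.2f} * x")
```

The fitted slope and intercept are estimates that depend on how the data were generated and prepared, which is the sense in which a model is a tool rather than the truth.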

Share Findings

High-fidelity communication is essential

Document all decisions

Build credibility through transparency

Include:

- What was done

- Why it was done

- What was found

- Weaknesses of the approach

Quantitative Research Essentials

Communication

Reproducibility

Ethics

Questions

Measurement

Data Collection

Data Cleaning

Exploratory Data Analysis

Modeling

Scaling

Communication (Most Important)

“Simple analysis, communicated well, is more valuable than complicated analysis communicated poorly.” (Alexander 2023)

“One challenge is that as you immerse yourself in the data, it can be difficult to remember what it was like when you first came to it.” (Alexander 2023)

- Write in plain language

- Use tables, graphs, and models effectively

- Focus on the audience’s perspective

Reproducibility

Everything must be independently repeatable.

Requirements:

- Open access to code

- Data availability or simulation

- Automated testing

- Clear documentation

Aim for autonomous end-to-end reproducibility

Ethics

“This means considering things like: who is in the dataset, who is missing, and why? To what extent will our story perpetuate the past? And is this something that ought to happen?” (Alexander 2023)

Consider the full context of the dataset (D’Ignazio and Klein 2020)

Acknowledge the social, cultural, and political forces (Crawford 2021)

Use data ethically with concern for impact and equity

Questions

Questions evolve through understanding

Challenge of operationalizing variables

Curiosity is essential and drives deeper exploration

Value of “hybrid” knowledge that combines multiple disciplines

Comfort with asking “dumb” questions

Measurement

“The world is so vibrant that it is difficult to reduce it to something that is possible to consistently measure and collect.” (Alexander 2023)

Measuring even simple things is challenging (e.g., measuring height: Shoes on or off? Time of day affects height. Different tools yield different results). More complex measurements are even harder. How do we measure happiness or pain?

Measurement requires decisions and is not value-free. Context and purpose guide all measurement choices.

Data Collection & Cleaning

“Data never speak for themselves; they are the puppets of the ventriloquists that cleaned and prepared them.” (Alexander 2023)

Collection determines possibilities

What and how we measure matters.

Cleaning requires many decisions

E.g., handling “prefer not to say” and open-text responses.

Document every step

To ensure transparency and reproducibility.

Consider implications of choices

E.g., ethics, representation.
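
One way to make a cleaning decision explicit is to encode it in a small function and record its consequences. The sketch below treats “prefer not to say” and blank answers as missing rather than dropping them silently or coding them as zero. The raw values and the `clean_income` helper are hypothetical; other defensible choices exist, and the point is only that the choice is visible and documented.

```python
# Hypothetical raw responses to an income question.
raw_income = ["45000", "prefer not to say", "62,500", "", "38000"]


def clean_income(value):
    """Return income as a float, or None if the answer is missing or declined."""
    text = value.strip().lower()
    if text in {"", "prefer not to say"}:
        return None  # decision: keep the respondent, mark the value as missing
    return float(text.replace(",", ""))  # decision: strip thousands separators


cleaned = [clean_income(v) for v in raw_income]

# Document the step so that readers can see what the cleaning changed.
cleaning_log = {
    "n_raw": len(raw_income),
    "n_missing": sum(v is None for v in cleaned),
}
print(cleaned)
print(cleaning_log)
```

Keeping a log like `cleaning_log` alongside the cleaned data makes it easier to report how many responses were affected by each decision.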

EDA, Modeling, & Scaling

Exploratory Data Analysis (EDA)

Iterative process

Never truly complete

Shapes understanding

Modeling

Tool for understanding

Not a recipe to follow

Just one representation of reality

Statistical significance \(\neq\) scientific significance

Statistical models help us explore the shape of the data; they are like echolocation
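
The gap between statistical and scientific significance can be seen with a little arithmetic. The sketch below runs a two-sample z-test on made-up summary statistics: two groups of 100,000 people whose means differ by 0.2 points on a scale with a standard deviation of 10. All numbers are assumptions chosen for illustration, and the z-test with a known, shared standard deviation is a simplification.

```python
import math


def two_sample_z(mean_a, mean_b, sd, n_per_group):
    """Two-sided z-test for a difference in means, assuming a known shared sd."""
    se = sd * math.sqrt(2 / n_per_group)         # standard error of the difference
    z = (mean_a - mean_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))   # two-sided normal p-value
    effect_size = (mean_a - mean_b) / sd         # standardized difference (Cohen's d)
    return z, p_value, effect_size


z, p_value, cohens_d = two_sample_z(50.2, 50.0, sd=10.0, n_per_group=100_000)
print(f"z = {z:.2f}, p = {p_value:.2g}, d = {cohens_d:.3f}")
```

With samples this large the p-value is far below conventional thresholds, yet the standardized difference is only about 0.02 standard deviations. Whether a difference that small matters is a substantive question that the test cannot answer.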

Scaling

Using programming languages like R and Python

Handle large datasets efficiently

Automate repetitive tasks

Share work widely and quickly

Outputs can reach many people easily

APIs can make analyses accessible in real-time

How Do Our Worlds Become Data?

To a certain extent we are wasting our time. We have a perfect model of the world—it is the world! But it is too complicated. If we knew perfectly how everything was affected by the uncountable factors that influence it, then we could forecast perfectly a coin toss, a dice roll, and every other seemingly random process each time. But we cannot. Instead, we must simplify things to that which is plausibly measurable, and it is that which we define as data. Our data are a simplification of the messy, complex world from which they were derived.

There are different approximations of “plausibly measurable”. Hence, datasets are always the result of choices. We must decide whether they are nonetheless reasonable for the task at hand. We use statistical models to help us think deeply about, explore, and hopefully come to better understand, our data. (Alexander 2023)

How Do Our Worlds Become Data?

Through skillful reduction 👨‍🍳

Embracing the Challenge

“Ultimately, we are all just telling stories with data, but these stories are increasingly among the most important in the world.” (Alexander 2023)

Telling good stories with data is difficult but rewarding.

Develop resilience and intrinsic motivation.

Accept that failure is part of the process.

Consider possibilities and probabilities.

Learn to make trade-offs.

No perfect analysis exists.

Aim for transparency and continuous improvement.

Key Takeaways

- Data storytelling bridges analysis and understanding

- Effective communication is paramount

- Ethics and reproducibility are foundational

- Ask meaningful questions and measure thoughtfully and transparently

- Data collection and cleaning shape your analysis

- Embrace the iterative nature of exploration and modeling

- Leverage technology to scale and share your work

- Be mindful of the limitations of your data

What will we do? Where is everything?

Brightspace

Course materials website: johnmclevey.com/SOCI3040/

Next class

Before class: Complete the assigned reading

In class: Introduction to R and RStudio